Yeah, The ******* Matrix.

My 20-plus-year-old self was so excited when the MUY people took me through this idea...

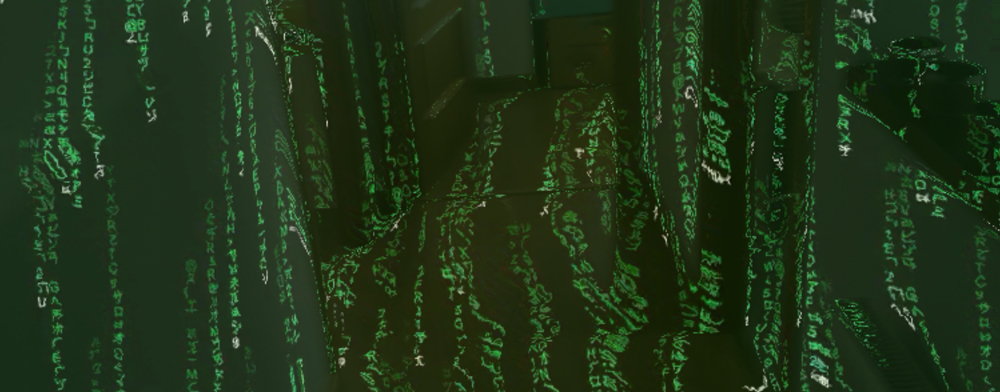

Take the famous digital rain and make it respond in real time.

In the early stages we needed two versions: one for the web, focused on mobile and tablet, and the main one for outdoor street marketing (billboards, bus stops, display trucks, etc.).

There are many digital rains out there, but we wanted to create something outstanding, different, better! How? The answer looked pretty obvious: we needed depth.

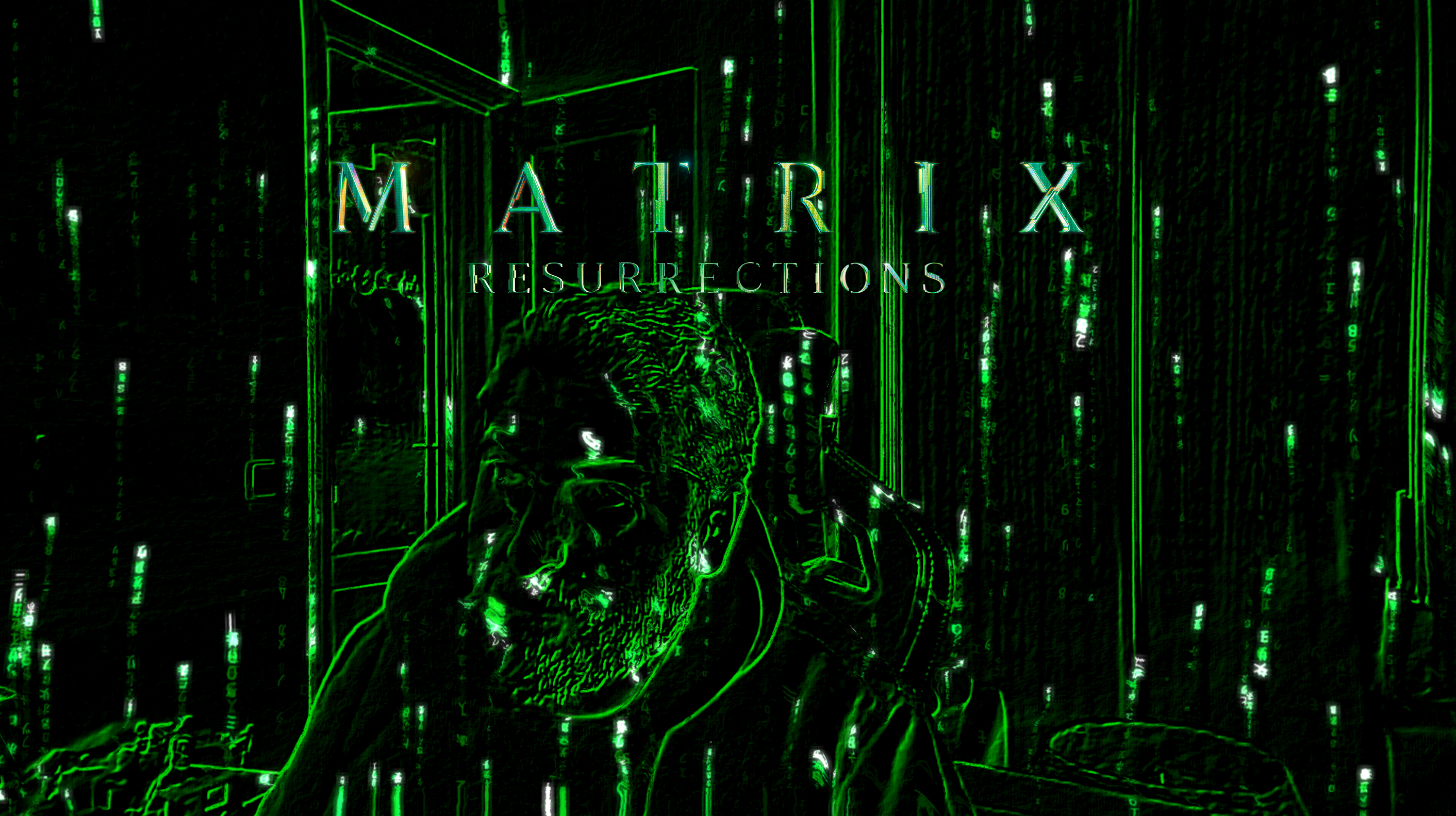

We started to explore the AR world and wondered how to take advantage of LiDAR cameras. Although these new technologies look wonderful in theory, the truth is that developing with them is quite complex and involves a lot of challenges and limitations.

Here we hit an insurmountable wall, fortunately at an early stage of the process. Affordable LiDAR cameras have a very short range, 9 m at most. Unusable outdoors, sad but true.

Meanwhile, we were exploring the best way to develop the online side. We were excited about the opportunity to use the AR platforms (ARKit, ARCore), despite being constrained to modern devices.

This would have linked our two apps, but the camera range problem was still limiting us. Time to change our minds?

Due to hardware limitations, we focused on finding software alternatives.

It became pretty clear to us that the best way to build our toy was an AI depth library, so we tried a few in Unreal Engine. Welcome depth map, welcome unlimited range, bye-bye performance. Time to optimize...

At this point, with the web experience in mind, we tried some TensorFlow depth libraries, which performed even worse.

Bearing in mind that what matters is the experience, the solution came from an old-school computer graphics trick. We could use 2D images to create a displacement map that distorts the rain of letters and gives a fake 3D look. We made a super simple test with a webcam and a digital rain video found on YouTube, used as the texture. It looked good, usable, and similar to our previous test with AI depth maps.
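To make the trick concrete, here is a minimal CPU sketch of displacement mapping in TypeScript. It is not the project's code: the names `Gray`, `luminance` and `displace` are made up for illustration, the shift is horizontal only, and a real version would most likely run per pixel on the GPU. The idea is that the brightness of each camera pixel decides how far the rain texture is shifted at that spot.

```typescript
/** Grayscale image stored row by row, values in 0..1 (hypothetical type). */
interface Gray {
  width: number;
  height: number;
  data: Float32Array;
}

/** Turn RGBA bytes (e.g. one webcam frame) into a brightness map in 0..1. */
function luminance(rgba: Uint8ClampedArray, width: number, height: number): Gray {
  const data = new Float32Array(width * height);
  for (let i = 0; i < width * height; i++) {
    const r = rgba[i * 4];
    const g = rgba[i * 4 + 1];
    const b = rgba[i * 4 + 2];
    // Rec. 601 luma weights; alpha is ignored.
    data[i] = (0.299 * r + 0.587 * g + 0.114 * b) / 255;
  }
  return { width, height, data };
}

/**
 * Distort the rain texture with the map. A map value of 0.5 is neutral;
 * brighter pixels sample the rain further right, darker ones further left.
 * `scale` is the total shift range in pixels (an assumed parameter).
 */
function displace(rain: Gray, map: Gray, scale: number): Gray {
  if (rain.width !== map.width || rain.height !== map.height) {
    throw new Error("rain and map must have the same size");
  }
  const out = new Float32Array(rain.width * rain.height);
  for (let y = 0; y < rain.height; y++) {
    for (let x = 0; x < rain.width; x++) {
      const m = map.data[y * map.width + x];
      const offset = Math.round((m - 0.5) * scale);
      // Clamp so we never sample outside the texture.
      const sx = Math.min(rain.width - 1, Math.max(0, x + offset));
      out[y * rain.width + x] = rain.data[y * rain.width + sx];
    }
  }
  return { width: rain.width, height: rain.height, data: out };
}
```

Each frame, the camera image becomes the map and the rain video becomes the texture, so anything bright or dark in front of the camera bends the letters around it. PixiJS, listed in the credits below, ships a displacement filter built on the same idea, though nothing here should be read as how the final piece was implemented.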

This option was full of advantages. We gained a lot of spare performance to make our toy shine, one codebase covered both sides (online and outdoor), and it was flexible and compatible with many different devices.

That was lucky, because the digital rain effect has to draw objects and edges (buildings, roads, etc.), and our project also had to draw cars, people, and other elements moving in real time. We needed this extra performance to prevent lag and deliver a good experience in every possible condition (night, day, indoors, outdoors, etc.).

On some outdoor displays we could even manually add 3D planes with the rain texture.

In the end, the requirements were scaled back. The online version was no longer needed, and we were left with a single outdoor piece. We focused on that display and exported a Node.js app that we could control externally. This allowed us to fine-tune settings once everything was installed.
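As a rough idea of what externally controlled settings can look like, here is a small TypeScript sketch. Everything in it is an assumption for illustration: the post does not list the real parameters, so `RainSettings`, its fields, the `LIMITS` ranges and `applyPatch` are all hypothetical. It shows one cautious way to accept a partial update from outside (say, a JSON message) without letting a bad value break a display that is already installed.

```typescript
/** Hypothetical tunable settings; the real configurator's parameters are not listed in this post. */
interface RainSettings {
  displacementScale: number; // strength of the fake-3D distortion, in pixels
  rainSpeed: number;         // rows per second
  brightness: number;        // 0..1
}

/** Assumed safe range for each setting. */
const LIMITS: Record<keyof RainSettings, [number, number]> = {
  displacementScale: [0, 64],
  rainSpeed: [1, 120],
  brightness: [0, 1],
};

/**
 * Merge an untrusted patch into the current settings.
 * Unknown keys and non-numeric values are ignored; numbers are clamped to LIMITS.
 * Returns a new object and leaves `current` untouched.
 */
function applyPatch(current: RainSettings, patch: unknown): RainSettings {
  const next: RainSettings = { ...current };
  if (typeof patch !== "object" || patch === null) return next;
  const incoming = patch as Record<string, unknown>;
  for (const key of Object.keys(LIMITS) as (keyof RainSettings)[]) {
    const value = incoming[key];
    if (typeof value !== "number" || !Number.isFinite(value)) continue;
    const [min, max] = LIMITS[key];
    next[key] = Math.min(max, Math.max(min, value));
  }
  return next;
}
```

A control panel could then send something like `{"brightness": 0.8}` at night and the running app would pick it up on the next frame, which is the kind of on-site fine-tuning described above.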

You can see how the final configurator works in this video.

I am preparing a simpler version of the configurator so I can share it with you.

Hope you enjoy!

muy

Lead developer. Technical direction. Fullstack development.

WebGL, PIXI Engine, PHP, AWS